Deep Learning – Image Classification

Interior Wind Noise Prediction And Visual Explanation System For Exterior Vehicle Design Using Combined Convolution Neural Networks

Objective

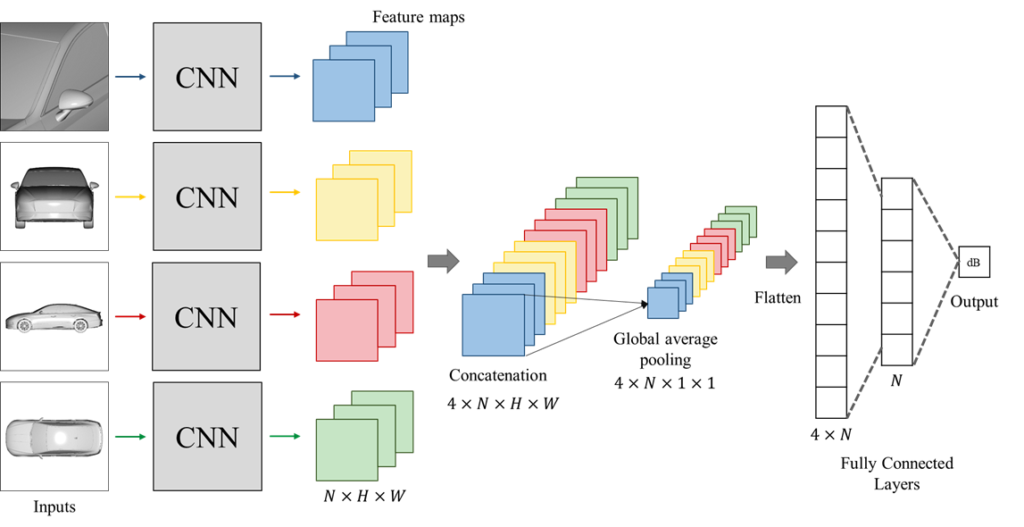

In this study, a convolutional neural network (CNN), a class of deep neural networks designed for processing image data, was applied to predict interior wind noise from vehicle design images taken from four different views. The proposed method achieves a root-mean-square error (RMSE) of 0.206, substantially reducing the time and cost required for interior wind noise estimation.
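For readers unfamiliar with the metric, the following is a minimal sketch of how an RMSE value like the one reported above is computed from measured and predicted noise levels. The function name `rmse` and the sample values are hypothetical and are not taken from the study.

```python
import math


def rmse(y_true, y_pred):
    """Root-mean-square error between two equal-length sequences of numbers."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Hypothetical measured vs. predicted wind noise levels (illustrative only).
measured = [60.0, 62.0]
predicted = [60.0, 64.0]
error = rmse(measured, predicted)
```

Because the errors are squared before averaging, a few large misses raise the RMSE more than many small ones.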

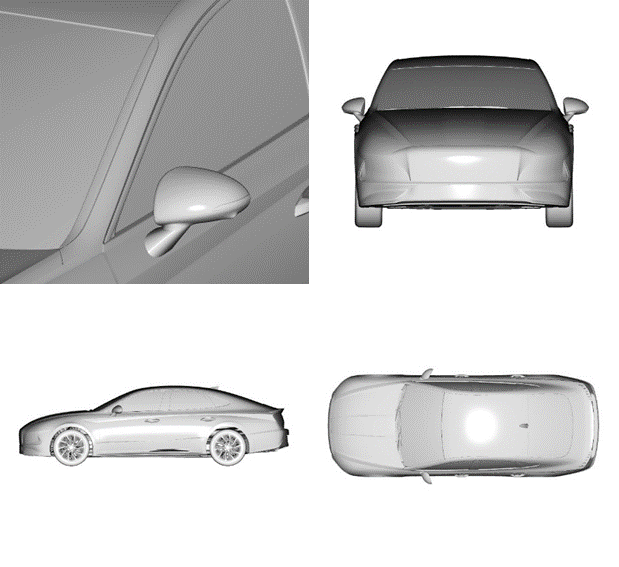

Data

10035 images of a sedan-type vehicle

Proposed Method

Our model combines the feature maps extracted by the CNN models: the feature maps are concatenated and passed to fully connected (FC) layers, which estimate the wind noise level in dB. The number of hidden layers and the number of neurons in the FC layers are optimized to improve prediction performance.
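The combination described above can be sketched in PyTorch as follows. This is an illustration under stated assumptions, not the architecture used in the study: it assumes one small CNN encoder per view, and the class names `ViewEncoder` and `CombinedCNN`, the layer sizes, and the default `hidden` widths are all hypothetical. The `hidden` tuple stands in for the number of hidden layers and neurons that the study optimizes.

```python
import torch
import torch.nn as nn


class ViewEncoder(nn.Module):
    """Small CNN mapping one view image to a feature vector (hypothetical backbone)."""

    def __init__(self, feat_dim: int = 32):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 16, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2),
            nn.Conv2d(16, feat_dim, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),  # global average pooling -> (N, feat_dim, 1, 1)
        )

    def forward(self, x):
        return self.features(x).flatten(1)  # (N, feat_dim)


class CombinedCNN(nn.Module):
    """Concatenates per-view features and regresses a single noise value."""

    def __init__(self, num_views: int = 4, feat_dim: int = 32, hidden=(64, 32)):
        super().__init__()
        # Assumption: a separate encoder for each view.
        self.encoders = nn.ModuleList([ViewEncoder(feat_dim) for _ in range(num_views)])
        layers = []
        in_dim = num_views * feat_dim
        for width in hidden:  # one Linear + ReLU pair per hidden layer
            layers += [nn.Linear(in_dim, width), nn.ReLU()]
            in_dim = width
        layers.append(nn.Linear(in_dim, 1))  # scalar output: predicted noise level
        self.head = nn.Sequential(*layers)

    def forward(self, views):
        # views: list of tensors, one per view, each shaped (N, 3, H, W)
        feats = [enc(v) for enc, v in zip(self.encoders, views)]
        fused = torch.cat(feats, dim=1)  # (N, num_views * feat_dim)
        return self.head(fused).squeeze(1)  # (N,)


model = CombinedCNN()
views = [torch.randn(2, 3, 64, 64) for _ in range(4)]  # random stand-in images
prediction = model(views)
```

Changing `hidden` (for example `hidden=(128,)` or `hidden=(128, 64, 32)`) is one simple way to search over the depth and width of the FC part.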

Resolution calibration and dual attention module based CNN for thoracic disease classification

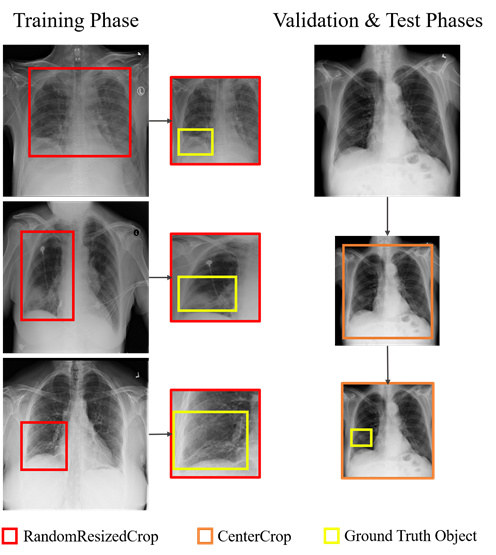

In thoracic disease classification, the original chest X-ray images are high-resolution. In existing convolutional neural network (CNN) models, however, the original images are resized to 224×224 before use. This resizing compresses the information excessively, so diseases confined to local areas may not be sufficiently represented, and a higher resolution is required to focus on local representations. Previous studies have therefore investigated CNNs with large input resolutions to improve classification performance, but a high-resolution input image reduces memory efficiency. This research studies a resolution calibration and attention-based CNN that counters the inefficiency caused by large input resolutions and improves classification performance by adjusting the input size based on the RandomResizedCrop method.

When RandomResizedCrop is used in the training phase, an object discrepancy problem arises between the training and test phases. As depicted in the figure, the original chest X-ray images are cropped using different scale factors 𝜎 and resized to the training input size. Because the cropped images are resized according to 𝜎, objects such as local diseases can be sufficiently represented. In contrast, during the validation and test phases, the original chest X-ray images are resized and then cropped with the CenterCrop method to the same size as that used in the training phase. As a result, the same object appears at different sizes in the training and testing phases.

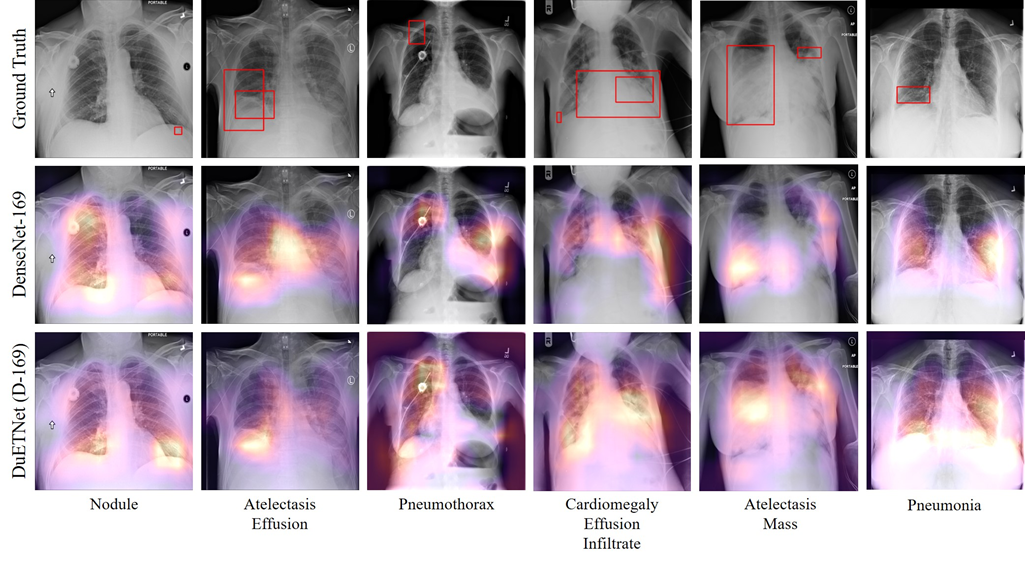

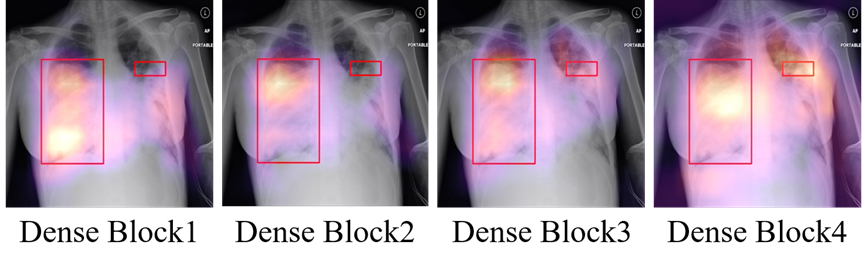

To evaluate the classification performance of the proposed module at the end of each dense block, we visualized the class activation map. The ground truth on the left (mass) was classified effectively in each case. However, the ground truth on the right (atelectasis) was classified only in the deeper dense block. In addition to visualizing the class activation map, we experimented with the channel-wise activation of three classes (pneumothorax, atelectasis, and nodule) in each dense block.